Hearing everyone’s opinion of the cost-efficiency and value of multicloud deployments is fun. Most people don’t get it right, more because of misperceptions and groupthink than anything else. I’m sorry to be the guy who comes along and crushes these myths, but somebody must do it.

Myth 1: Multicloud means no lock-in. I understand this assumption. If we use many different cloud brands, we avoid being locked in to any single public cloud provider. Right? That is not the case. I’ve covered this issue before, so I won’t belabor it now. However, it’s the No. 1 myth I hear, and I have some bad news for those of you who still believe it.

It’s simple: Using the native APIs of a single cloud provider causes lock-in. The security, storage, governance, and even finops APIs you leverage on one provider are not portable to another provider. To move, you must change your code to interact with the other provider’s native services, on the terms of those services. This is the definition of lock-in. No matter how many other cloud providers you have in your multicloud portfolio, this limitation still exists.

Myth 2: Multicloud is more cost-effective. This one is complicated. A multicloud will be more costly than a single-cloud deployment, and for obvious reasons. You have more complex security and cloud operations, and you must keep a wider range of skills on hand to run a multicloud effectively. All of this adds cost and risk, a natural result of the additional architectural complexity that comes with more moving parts.

For most businesses, the selling point is agility and the ability to use best-of-breed cloud services to deliver innovation value. We accept a multicloud that is more costly to build, deploy, and operate when the technology that best fits a specific problem justifies the additional expense. If multicloud does not deliver that, it should not be deployed.

Myth 3: Multicloud deployments should not include traditional systems. This one is a judgment call. As with everything else around multicloud deployment, the consultant’s bummer of a response is, “It depends.”

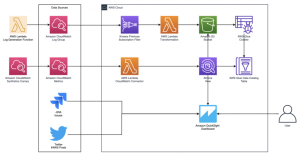

We should consider including all systems (legacy, edge, software as a service, and even small industry-specific clouds) as part of a multicloud infrastructure. We manage all of these systems through a common control plane, which many call a supercloud or metacloud. This means we solve security, operations, data management, and application development and deployment problems for all systems, not just for the major public cloud providers.

If you don’t move in this direction, you miss an opportunity to bring most of your systems under the same management and security frameworks, which would free you from juggling whatever native tools each silo uses. Of course, this makes multicloud deployments more complex and costly. But if we’re attempting to solve these problems in holistic and scalable ways, we might as well extend what’s working in our multicloud to other platforms as well.

This may be the most misunderstood of all the myths I’m looking to bust, but it needs to be called out. Of course, you may have good reasons not to include other cloud and non-cloud systems within your multicloud deployment framework, and that’s fine. Still, it’s a question that needs to be asked, and it is often ignored.

I suspect I’ll be updating this list as we get deeper into multicloud deployments, but for now, this is my story and I’m sticking to it.

Copyright © 2023 IDG Communications, Inc.

Originally posted on March 24, 2023 @ 1:31 pm