Artificial Intelligence

‘Move fast and build things’ versus ‘acceleration without guardrails’ at Broadband Breakfast Live Online.

WASHINGTON, March 28, 2024 – The rapid advancement of artificial intelligence has sparked a debate between those who advocate for swift adoption and those who urge caution and regulation.

This tension was at the forefront of a live online panel discussion hosted by Broadband Breakfast on Wednesday.

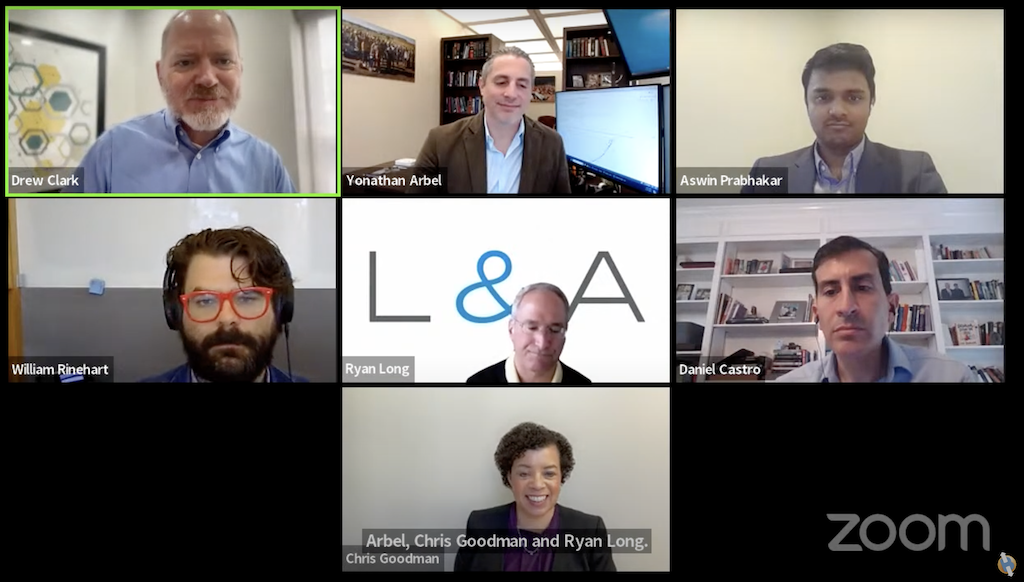

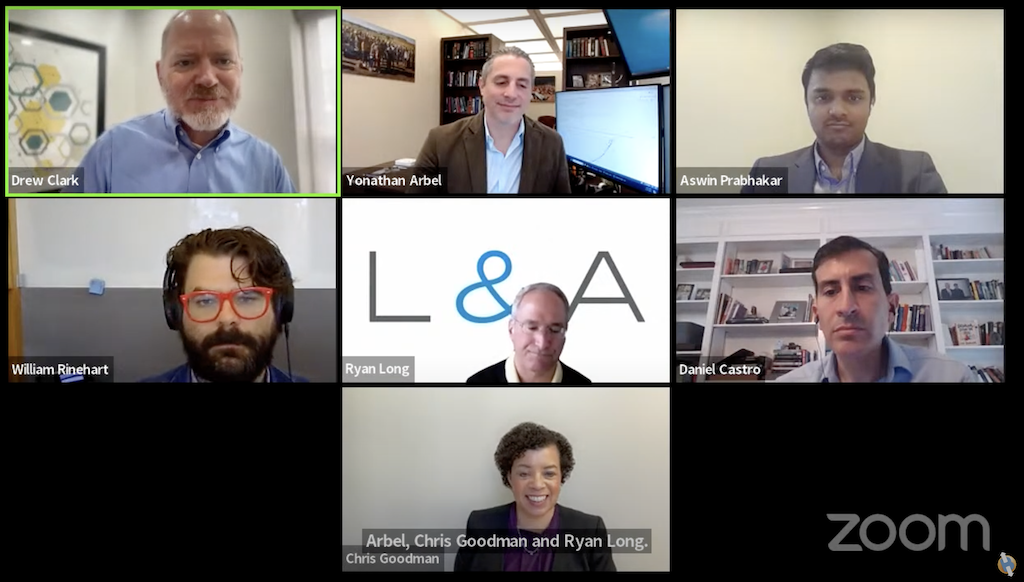

The event, moderated by Drew Clark, editor and publisher of Broadband Breakfast, featured experts with differing views on the pace of AI development and deployment. Daniel Castro from the Information Technology and Innovation Foundation strongly advocated for accelerating AI adoption in the United States, arguing that it is crucial for the country to remain competitive with nations like China.

Broadband Breakfast on March 27, 2024 – Generative AI and Congressional Action

How might regulation of artificial intelligence factor into the national storyline?

Turning Facebook's much-criticized slogan on its head, Castro said, "we should be moving fast and building things."

On the other hand, panelists such as Yonathan Arbel from the University of Alabama and Chris Chambers Goodman from Pepperdine Caruso School of Law emphasized the need for caution and regulatory steps to address potential risks and harms associated with AI technologies.

“Acceleration without guardrails or training is more dangerous than innovative,” Goodman said, calling for substantive safeguards to manage AI’s societal impacts.

The release of the National Telecommunications and Information Administration’s AI Accountability Report earlier on Wednesday further fueled the debate.

NTIA Releases Accountability Report, Calls for Independent Audits of AI

NTIA Administrator Alan Davidson said that the agency looked to financial auditing as inspiration for the report's proposals.

The NTIA's report, which calls for auditing high-risk AI systems, prompted reactions from several of the panelists. Those who adopted a more "decelerationist" view saw the report as a step in the right direction.

Ryan Long, a tech and media attorney who is a non-residential fellow at Stanford Law School's Center for Internet and Society, distinguished between the issues raised by generative AI, such as OpenAI's ChatGPT, and those raised by more "traditional" AI.

He argued for a balanced approach that uses AI to supplement human labor rather than fully replacing it. “There’s a cost to being this bullish with technology,” Long remarked.

The discussion also touched on the role of state legislation and European regulatory models. Will Rinehart, a senior fellow at the American Enterprise Institute, said that a patchwork of state laws would complicate compliance for AI developers.

Aswin Prabhakar, a policy analyst at ITIF, raised concerns about the European Union's approach, which he said could inhibit innovation.

Panelists

- Daniel Castro, Vice President, Information Technology and Innovation Foundation

- Aswin Prabhakar, Policy Analyst, Information Technology and Innovation Foundation

- Will Rinehart, Senior Fellow, American Enterprise Institute

- Yonathan Arbel, Professor of Law, University of Alabama

- Chris Chambers Goodman, Professor of Law, Pepperdine Caruso School of Law

- Ryan E. Long, Principal, Long & Associates PLLC

- Drew Clark (moderator), Editor and Publisher, Broadband Breakfast
