In today’s rapidly evolving technological landscape, generative AI, and especially Large Language Models (LLMs), is ushering in a significant inflection point. These models stand at the forefront of change, reshaping how we interact with information.

The use of LLMs for content consumption and generation holds immense promise for businesses. They have the potential to automate content creation, enhance content quality, diversify content offerings, and even personalize content. This inflection point is a great opportunity to discover innovative ways to accelerate your business’s potential: explore the transformative impact and shape your business strategy today.

LLMs are finding practical applications in various domains. Take, for example, Microsoft 365 Copilot, a recent innovation that aims to reinvent productivity for businesses by simplifying interactions with data. It makes data more accessible and comprehensible by summarizing email threads in Microsoft Outlook, highlighting key discussion points, suggesting action items in Microsoft Teams, and enabling users to automate tasks and create chatbots in Microsoft Power Platform.

Data from GitHub demonstrates the tangible benefits of GitHub Copilot, with 88 percent of developers reporting increased productivity and 73 percent reporting less time spent searching for information or examples.

Transforming how we search

Remember the days when we typed keywords into search bars and had to click on several links to get the information we needed?

Today, search engines like Bing are changing the game. Instead of providing a lengthy list of links, they intelligently interpret your question and draw on sources from across the internet. What’s more, they present the information in a clear and concise manner, complete with sources.

The shift in online search is making the process more user-friendly and helpful. We are moving from endless lists of links towards direct, easy-to-understand answers. The way we search online has undergone a true evolution.

Now, imagine the transformative impact if businesses could search, navigate, and analyze their internal data with a similar level of ease and efficiency. This new paradigm would enable employees to swiftly access corporate knowledge and harness the power of enterprise data. An architectural pattern known as Retrieval Augmented Generation (RAG) makes this streamlined experience possible; on Azure, it can be built by combining Azure Cognitive Search with Azure OpenAI Service.

The rise of LLMs and RAG: Bridging the gap in information access

RAG is a natural language processing technique that combines the capabilities of large pre-trained language models with external retrieval or search mechanisms. It introduces external knowledge into the generation process, allowing models to pull in information beyond their initial training.

Here’s a detailed breakdown of how RAG works:

- Input: The system receives an input sequence, such as a question that needs an answer.

- Retrieval: Prior to generating a response, the RAG system searches for (or “retrieves”) relevant documents or passages from a predefined corpus. This corpus could encompass any collection of texts containing pertinent information related to the input.

- Augmentation and generation: The retrieved documents merge with the original input to provide context. This combined data is fed into the language model, which generates a response or output.
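To make these three steps concrete, here is a minimal, self-contained Python sketch. It is illustrative only: the word-overlap scoring in `retrieve` is a toy stand-in for a real search service such as Azure Cognitive Search, and `generate` is a hypothetical callable that would wrap a call to a language model (for example, through Azure OpenAI Service). No actual service is called here; the demo simply echoes the prompt the model would receive.

```python
import re
from typing import Callable


def tokenize(text: str) -> set[str]:
    """Lowercased word tokens; a deliberately simple stand-in for a real text analyzer."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Retrieval: rank passages by how many query terms they share (toy scoring)."""
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(doc)), doc) for doc in corpus]
    # Drop passages with no overlap; sorted() is stable, so ties keep corpus order.
    ranked = sorted((pair for pair in scored if pair[0] > 0), key=lambda pair: -pair[0])
    return [doc for _, doc in ranked[:k]]


def build_prompt(query: str, passages: list[str]) -> str:
    """Augmentation: combine the retrieved passages with the original input."""
    sources = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the numbered sources below, citing them by number.\n"
        f"Sources:\n{sources}\n\nQuestion: {query}\nAnswer:"
    )


def rag_answer(query: str, corpus: list[str], generate: Callable[[str], str]) -> str:
    """Input -> retrieval -> augmentation -> generation."""
    return generate(build_prompt(query, retrieve(query, corpus)))


if __name__ == "__main__":
    corpus = [
        "Hotel costs are capped per night under the travel policy.",
        "The cafeteria opens at 8 a.m.",
        "Travel expenses must be filed within 30 days.",
    ]
    # Echo the prompt instead of calling a real model, to show what the model would receive.
    print(rag_answer("How are travel expenses filed?", corpus, generate=lambda prompt: prompt))
```

Production systems typically replace keyword overlap with full-text, vector, or hybrid search, but the shape of the pipeline stays the same.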

RAG can tap into dynamic, up-to-date internal and external data sources, so it can access and use newer information without retraining the model. This ability to incorporate the latest knowledge is a key advantage: it leads to more precise, better-informed, and contextually relevant responses.
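The toy sketch below illustrates why no retraining is needed: new knowledge becomes retrievable as soon as a document is added to the search index, while the language model itself is untouched. The `InMemoryIndex` class is hypothetical and stands in for a real search index; it is not an Azure API.

```python
import re
from collections import defaultdict


def _terms(text: str) -> set[str]:
    """Lowercased word tokens used for both indexing and querying."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class InMemoryIndex:
    """Hypothetical toy inverted index: maps each term to the ids of documents containing it."""

    def __init__(self) -> None:
        self.docs: dict[int, str] = {}
        self.postings: defaultdict[str, set[int]] = defaultdict(set)

    def add(self, text: str) -> int:
        """Index a new document; it is searchable immediately, with no model retraining."""
        doc_id = len(self.docs)
        self.docs[doc_id] = text
        for term in _terms(text):
            self.postings[term].add(doc_id)
        return doc_id

    def search(self, query: str) -> list[str]:
        """Return matching documents, most shared query terms first (ties by insertion order)."""
        hits: defaultdict[int, int] = defaultdict(int)
        for term in _terms(query):
            for doc_id in self.postings.get(term, ()):
                hits[doc_id] += 1
        ranked = sorted(hits, key=lambda doc_id: (-hits[doc_id], doc_id))
        return [self.docs[doc_id] for doc_id in ranked]


if __name__ == "__main__":
    index = InMemoryIndex()
    index.add("Q1 revenue grew in the services segment.")
    print(index.search("Q2 results"))  # prints [] because nothing about Q2 is indexed yet
    index.add("Q2 results were published on the intranet.")
    print(index.search("Q2 results"))  # prints ['Q2 results were published on the intranet.']
```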

RAG in action: A new era of business productivity

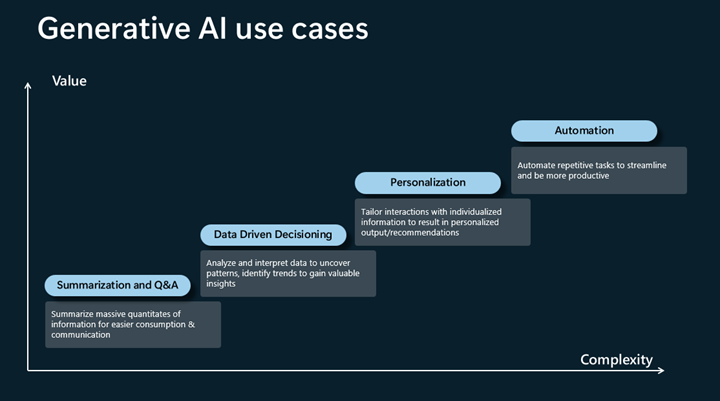

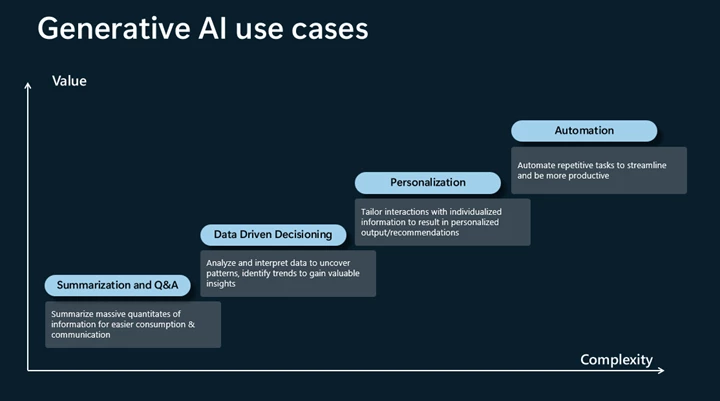

Here are some scenarios where the RAG approach can enhance employee productivity:

- Summarization and Q&A: Summarize massive quantities of information and answer questions about it for easier consumption and communication.

- Data-driven decisioning: Analyze and interpret data to uncover patterns and identify trends that yield valuable insights.

- Personalization: Tailor interactions with individualized information to produce personalized recommendations.

- Automation: Automate repetitive tasks to streamline work and boost productivity.

As AI continues to evolve, its applications across various fields are becoming increasingly pronounced.

The RAG approach for financial analysis

Consider the world of financial data analysis for a major corporation—an arena where accuracy, timely insights, and strategic decision-making are paramount. Let’s explore how RAG use cases can enhance financial analysis with a fictitious company called Contoso.

1. Summarization and Q&A

- Scenario: Contoso has just concluded its fiscal year, generating a detailed financial report that spans hundreds of pages. The board members want a summarized version of this report, highlighting key performance indicators.

- Sample prompt: “Summarize the main financial outcomes, revenue streams, and significant expenses from Contoso’s annual financial report.”

- Result: The model provides a concise summary detailing Contoso’s total revenue, major revenue streams, significant costs, profit margins, and other key financial metrics for the year.
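A report that spans hundreds of pages will often exceed a model’s context window, so it cannot be sent to the model in one piece. One common workaround, sketched here as an assumption rather than as the method behind the result above, is map-reduce summarization: split the report into overlapping chunks, summarize each chunk, then summarize the partial summaries. The `summarize` callable is hypothetical and would wrap an LLM call; chunk size is counted in words for simplicity, whereas real systems usually budget in model tokens.

```python
from typing import Callable


def chunk_words(text: str, size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into windows of `size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("require 0 <= overlap < size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    # Windows start every `step` words; the last one begins at or after len(words) - size,
    # so every word lands in at least one chunk.
    starts = range(0, max(len(words) - overlap, 1), step)
    return [" ".join(words[i:i + size]) for i in starts]


def map_reduce_summarize(
    text: str, summarize: Callable[[str], str], size: int = 500, overlap: int = 50
) -> str:
    """Map: summarize each chunk. Reduce: summarize the joined partial summaries."""
    partials = [summarize(chunk) for chunk in chunk_words(text, size, overlap)]
    if not partials:
        return ""
    if len(partials) == 1:
        return partials[0]  # short input: a single pass is enough
    return summarize("\n\n".join(partials))


if __name__ == "__main__":
    report = " ".join(f"w{i}" for i in range(1000))  # stand-in for a long report
    first_word = lambda chunk: chunk.split()[0]        # placeholder "summarizer"
    print(len(chunk_words(report)))                   # 3 chunks, starting at w0, w450, w900
    print(map_reduce_summarize(report, first_word))
```

If the combined partial summaries are still too long, the reduce step can be applied recursively.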

2. Data-driven decisioning

- Scenario: With the new fiscal year underway, Contoso wants to analyze its revenue sources and compare them to those of its main competitors to better strategize for market dominance.

- Sample prompt: “Analyze Contoso’s revenue breakdown from the past year and compare it to its three main competitors’ revenue structures to identify any market gaps or opportunities.”

- Result: The model presents a comparative analysis, revealing that while Contoso dominates in service revenue, it lags in software licensing, an area where competitors have seen growth.
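Language models are not always reliable at arithmetic, so one design option (an assumption, not something the scenario prescribes) is to compute comparisons deterministically from the retrieved figures and let the model explain the results. The sketch below computes each company’s revenue mix and flags streams where competitors’ average share exceeds Contoso’s by more than a threshold. The function names and sample figures are hypothetical and purely illustrative.

```python
def revenue_mix(streams: dict[str, float]) -> dict[str, float]:
    """Share of total revenue contributed by each stream."""
    total = sum(streams.values())
    if total <= 0:
        raise ValueError("total revenue must be positive")
    return {name: amount / total for name, amount in streams.items()}


def find_gaps(
    ours: dict[str, float], competitors: list[dict[str, float]], threshold: float = 0.05
) -> dict[str, float]:
    """Streams where competitors' average share exceeds ours by more than `threshold`."""
    our_mix = revenue_mix(ours)
    comp_mixes = [revenue_mix(c) for c in competitors]
    gaps: dict[str, float] = {}
    for stream in sorted(set().union(*comp_mixes)):
        avg_share = sum(m.get(stream, 0.0) for m in comp_mixes) / len(comp_mixes)
        shortfall = avg_share - our_mix.get(stream, 0.0)
        if shortfall > threshold:
            gaps[stream] = round(shortfall, 4)
    return gaps


if __name__ == "__main__":
    # Illustrative figures only; not Contoso's (or anyone's) actual results.
    contoso = {"services": 70.0, "licensing": 30.0}
    competitors = [
        {"services": 50.0, "licensing": 50.0},
        {"services": 40.0, "licensing": 60.0},
        {"services": 60.0, "licensing": 40.0},
    ]
    print(find_gaps(contoso, competitors))
```

The computed gaps can then be passed to the model as retrieved context, so its narrative rests on numbers it did not have to calculate itself.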

3. Personalization

- Scenario: Contoso plans to engage its investors with a personalized report, showcasing how the company’s performance directly impacts their investments.

- Sample prompt: “Given the annual financial data, generate a personalized financial impact report for each investor, detailing how Contoso’s performance has affected their investment value.”

- Result: The model offers tailored reports for each investor. For instance, an investor with a significant stake in service revenue streams would see how the company’s dominance in that sector has positively impacted their returns.

4. Automation

- Scenario: Every quarter, Contoso receives multiple financial statements and reports from its various departments. Manually consolidating these for a company-wide view would be immensely time-consuming.

- Sample prompt: “Automatically collate and categorize the financial data from all departmental reports of Contoso for Q1 into overarching themes like ‘Revenue’, ‘Operational Costs’, ‘Marketing Expenses’, and ‘R&D Investments’.”

- Result: The model efficiently combines the data, providing Contoso with a consolidated view of its financial health for the quarter, highlighting strengths and areas needing attention.
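The consolidation step can be pictured as categorize-then-aggregate. In the sketch below, a hypothetical keyword table stands in for the categorization that the scenario assigns to the model, and the department line items are made-up examples; the point is the shape of the pipeline, not the classification rules.

```python
import re
from collections import defaultdict

# Hypothetical keyword rules standing in for the model's categorization step.
CATEGORY_KEYWORDS: dict[str, tuple[str, ...]] = {
    "Revenue": ("revenue", "sales", "income"),
    "Operational Costs": ("rent", "salaries", "utilities", "operations"),
    "Marketing Expenses": ("marketing", "advertising", "campaign"),
    "R&D Investments": ("research", "r&d", "prototype"),
}


def categorize(description: str) -> str:
    """Return the first category with a keyword that appears as a whole word in the description."""
    words = set(re.findall(r"[a-z&]+", description.lower()))
    for category, keywords in CATEGORY_KEYWORDS.items():
        if any(keyword in words for keyword in keywords):
            return category
    return "Uncategorized"  # surfaced for human review rather than silently dropped


def consolidate(reports: dict[str, list[tuple[str, float]]]) -> dict[str, float]:
    """Collate line items from every department report into per-category totals."""
    totals: defaultdict[str, float] = defaultdict(float)
    for line_items in reports.values():
        for description, amount in line_items:
            totals[categorize(description)] += amount
    return dict(totals)


if __name__ == "__main__":
    reports = {
        "Sales": [("Product sales", 1200.0), ("Advertising campaign", 150.0)],
        "Engineering": [("Prototype hardware", 300.0), ("Salaries", 500.0)],
        "Facilities": [("Office rent", 200.0), ("Coffee supplies", 20.0)],
    }
    print(consolidate(reports))
```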

LLMs: Transforming content generation for businesses

By leveraging RAG-based solutions, businesses can boost employee productivity, streamline processes, and make data-driven decisions. As we continue to embrace and refine these technologies, the range of possible applications will keep expanding.

Where to start?

Microsoft provides a series of tools to suit your needs and use cases.


Integration of RAG into business operations is not just a trend, but a necessity in today’s data-driven world. By understanding and leveraging these solutions, businesses can unlock new avenues for growth and productivity.