Generative AI (GenAI) and large language models (LLMs) are disrupting the way we work at a global scale. Snowflake is excited to announce an innovative product lineup that brings our platform’s ease of use, security and governance to the GenAI world. Through these new offerings, any user can incorporate LLMs into analytical processes in seconds, developers can create GenAI-powered apps in minutes, and powerful workflows such as fine-tuning foundation models on enterprise data can run within hours, all within Snowflake’s security perimeter. These advancements enable developers and analysts of all skill levels to bring GenAI to their already secure and governed enterprise data.

Let’s dive into all the capabilities developers can use to securely and effortlessly take advantage of generative AI on their enterprise data with Snowflake.

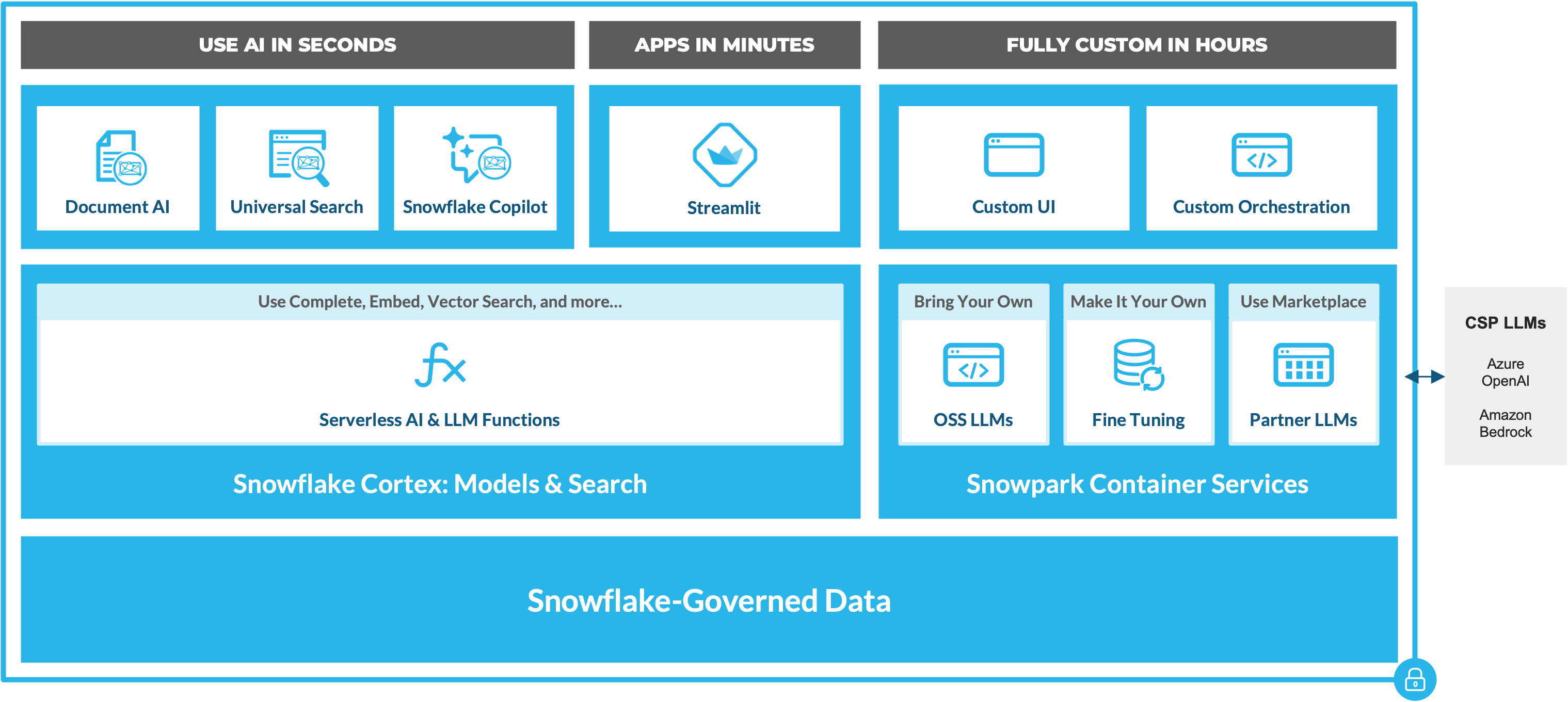

Figure 1. Overview of GenAI and LLM capabilities in Snowflake

To securely bring generative AI to their already governed data, Snowflake customers have access to two foundational components:

Snowflake Cortex: Snowflake Cortex (available in private preview) is an intelligent, fully managed service that offers access to industry-leading AI models, LLMs and vector search functionality so organizations can quickly analyze data and build AI applications. To help enterprises quickly build LLM apps that understand their data, Snowflake Cortex gives users access to a growing set of serverless functions that provide inference on industry-leading generative LLMs such as Meta AI’s Llama 2 model, task-specific models to accelerate analytics, and advanced vector search.

Snowflake Cortex is not limited to developers; it is also the underlying service behind LLM-powered experiences that come with a full-fledged user interface. These include Document AI, Snowflake Copilot and Universal Search, all in private preview.

Snowpark Container Services: This additional Snowpark runtime (available soon in public preview in select AWS regions) enables developers to effortlessly deploy, manage and scale custom containerized workloads and models, for tasks such as fine-tuning open-source LLMs, using secure Snowflake-managed infrastructure with GPU instances, all within the boundary of their Snowflake account.

With these two components, developers can build LLM apps without moving data outside Snowflake’s governed boundary.

Use AI in seconds

To democratize access to enterprise data and AI and expand adoption beyond a few experts, Snowflake is making it possible for any user to take advantage of cutting-edge LLMs without custom integrations or front-end development. This includes complete UI-based experiences, such as Snowflake Copilot, as well as LLM-based SQL and Python functions that cost-effectively accelerate analytics using the specialized and general-purpose models available through Snowflake Cortex.

Build LLM apps with your data in minutes

Developers can now build LLM apps that understand the unique nuances of their business and data in minutes, without integrations, manual LLM deployment or GPU infrastructure management. To build these applications natively inside Snowflake using Retrieval Augmented Generation (RAG), which supplies the LLM with relevant enterprise data at query time, developers can use:

Snowflake Cortex Functions: As a fully managed service, Snowflake Cortex ensures that all customers have access to the necessary building blocks for LLM app development without the need for complex infrastructure management.

This includes a set of general-purpose functions, backed by industry-leading open source LLMs and high-performance proprietary LLMs, that make prompt engineering easy across a broad range of use cases. Initial functions include:

- Complete (in private preview) – users can pass a prompt and select the LLM they want to use. For the private preview, users will be able to choose among the three model sizes of Llama 2 (7B, 13B and 70B).

- Text to SQL (in private preview) – generates SQL from natural language using the same Snowflake LLM that powers the Snowflake Copilot experience.

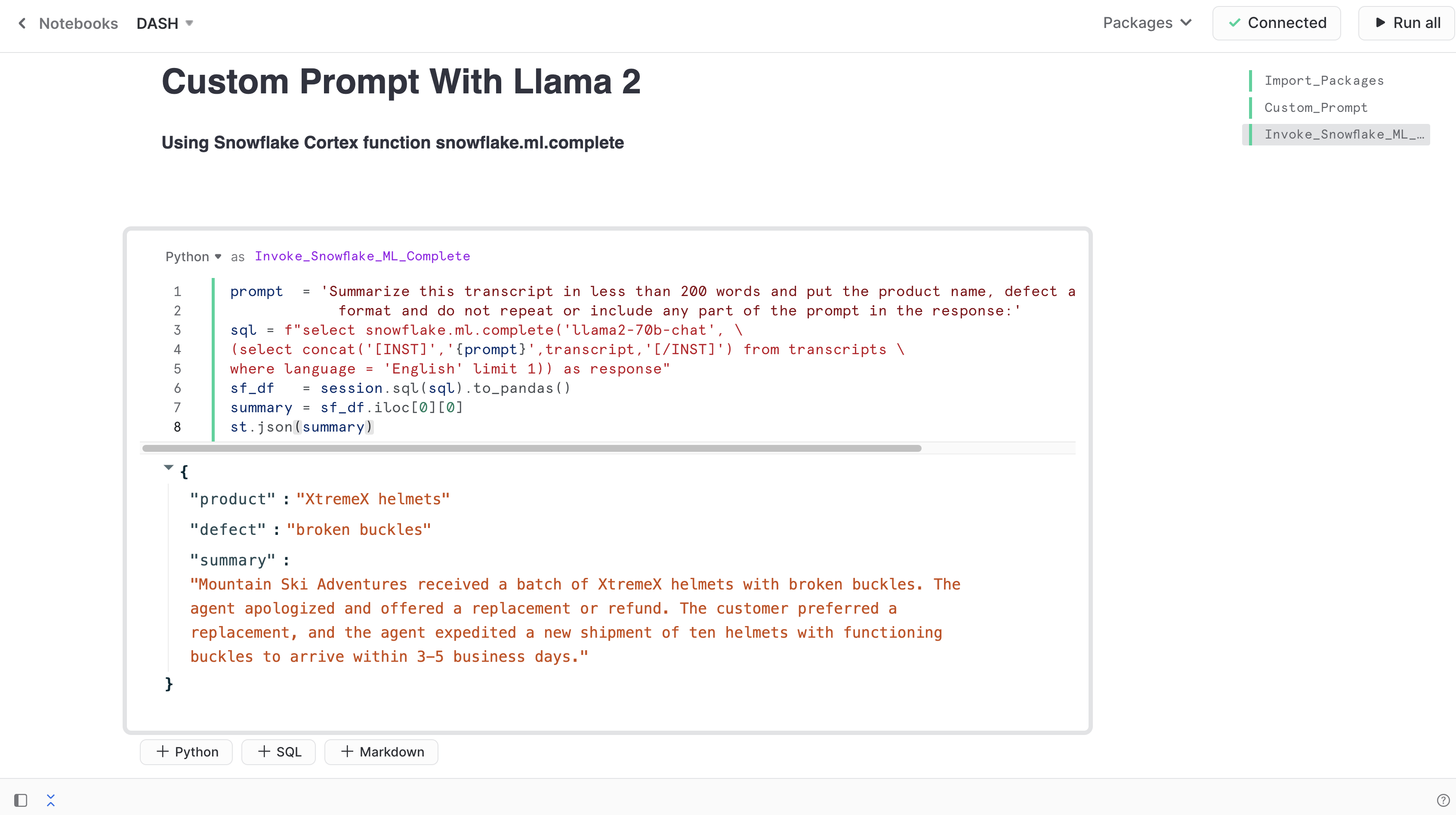

Figure 2. Using a Snowflake Notebook (private preview) to ask Llama 2 for a custom summary of a Snowflake table
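The Complete pattern described above (pick a model, pass a prompt) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not Snowflake’s API: the model identifiers, the `complete` wrapper and the `backend` callable are hypothetical stand-ins for whatever client actually calls the service, and the prompt simply serializes table rows the way a custom-summary request like the one in Figure 2 might.

```python
from typing import Callable, Dict, List

# Hypothetical identifiers for the three Llama 2 sizes offered in the preview.
LLAMA2_MODELS = ("llama2-7b", "llama2-13b", "llama2-70b")

# A backend takes (model, prompt) and returns generated text.
Backend = Callable[[str, str], str]


def build_summary_prompt(instruction: str, rows: List[Dict[str, object]]) -> str:
    """Serialize table rows under an instruction, one row per line."""
    lines = [instruction, "", "Rows:"]
    for row in rows:
        lines.append("; ".join(f"{col}={val}" for col, val in row.items()))
    return "\n".join(lines)


def complete(model: str, prompt: str, backend: Backend) -> str:
    """Hypothetical Complete-style wrapper: validate the model choice, then delegate."""
    if model not in LLAMA2_MODELS:
        raise ValueError(f"unknown model {model!r}; expected one of {LLAMA2_MODELS}")
    return backend(model, prompt)


def echo_backend(model: str, prompt: str) -> str:
    """Stub that stands in for the real LLM service in this sketch."""
    return f"[{model}] received {len(prompt.splitlines())} prompt lines"


rows = [
    {"ticket_id": 101, "comment": "Delivery was late but support was helpful"},
    {"ticket_id": 102, "comment": "Product arrived damaged"},
]
prompt = build_summary_prompt("Summarize the main complaints in one sentence.", rows)
print(complete("llama2-70b", prompt, echo_backend))  # prints "[llama2-70b] received 5 prompt lines"
```

Keeping the backend injectable means the prompt-building logic can be checked without any network access; swapping `echo_backend` for a real client is the only change needed to send the request to an actual model.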


In addition, these functions include vector embedding and semantic search functionality, so users can easily contextualize the model responses with their data to create customized apps in minutes. This includes:

- Embed Text (in private preview soon) – This function transforms a given text input into vector embeddings using a user-selected embedding model.

- Vector Distance (in private preview soon) – To calculate the distance between vectors, developers will have three functions to choose from: cosine similarity (vector_cosine_distance()), L2 norm (vector_l2_distance()) and inner product (vector_inner_product()).

- Native Vector Data Type (in private preview soon) – To enable these functions to run against your data, vector is now natively supported in Snowflake alongside its other data types.
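To make the three distance measures and the retrieval step concrete, here is a minimal pure-Python sketch of the math, assuming small in-memory vectors. It is not Snowflake’s implementation: the function names are hypothetical, cosine distance is taken as 1 minus cosine similarity (a common convention; the exact semantics of `vector_cosine_distance()` should be checked against the preview documentation), and the hand-written 3-dimensional vectors stand in for the output of an embedding function such as Embed Text. The `top_k` and `build_rag_prompt` helpers show the RAG flow: rank stored passages by distance to the query embedding, then place the closest ones in the prompt.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def _check_dims(a: Vector, b: Vector) -> None:
    """Refuse to compare vectors of different lengths instead of silently truncating."""
    if len(a) != len(b):
        raise ValueError(f"dimension mismatch: {len(a)} vs {len(b)}")


def inner_product(a: Vector, b: Vector) -> float:
    """Sum of element-wise products (a · b)."""
    _check_dims(a, b)
    return sum(x * y for x, y in zip(a, b))


def l2_distance(a: Vector, b: Vector) -> float:
    """Euclidean distance, i.e. the L2 norm of a - b."""
    _check_dims(a, b)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_distance(a: Vector, b: Vector) -> float:
    """1 - cosine similarity: 0 = same direction, 1 = orthogonal, 2 = opposite."""
    norm = math.sqrt(inner_product(a, a)) * math.sqrt(inner_product(b, b))
    if norm == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - inner_product(a, b) / norm


def top_k(query: Vector, docs: List[Tuple[str, Vector]], k: int = 2) -> List[str]:
    """Return the k passages whose embeddings are closest to the query."""
    ranked = sorted(docs, key=lambda doc: cosine_distance(query, doc[1]))
    return [text for text, _ in ranked[:k]]


def build_rag_prompt(question: str, context: List[str]) -> str:
    """Ground the question in retrieved passages before sending it to an LLM."""
    bullets = "\n".join(f"- {passage}" for passage in context)
    return f"Answer using only this context:\n{bullets}\n\nQuestion: {question}"


# Toy 3-d "embeddings"; a real app would get these from an embedding model.
docs = [
    ("Refunds are accepted within 30 days.", [1.0, 0.0, 0.0]),
    ("Standard shipping takes 5 business days.", [0.0, 1.0, 0.0]),
    ("Refunds require the original receipt.", [0.9, 0.1, 0.0]),
]
query_embedding = [1.0, 0.05, 0.0]  # pretend embedding of "How do refunds work?"
context = top_k(query_embedding, docs, k=2)
print(build_rag_prompt("How do refunds work?", context))
```

Inside Snowflake, the same ranking would presumably be expressed as an `ORDER BY` over a vector-distance expression on a table with a vector column, so the data never leaves the account; the Python version only illustrates the arithmetic and the shape of the retrieve-then-prompt loop.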

Figure 3. Using embedding and vector distance SQL functions in Snowsight

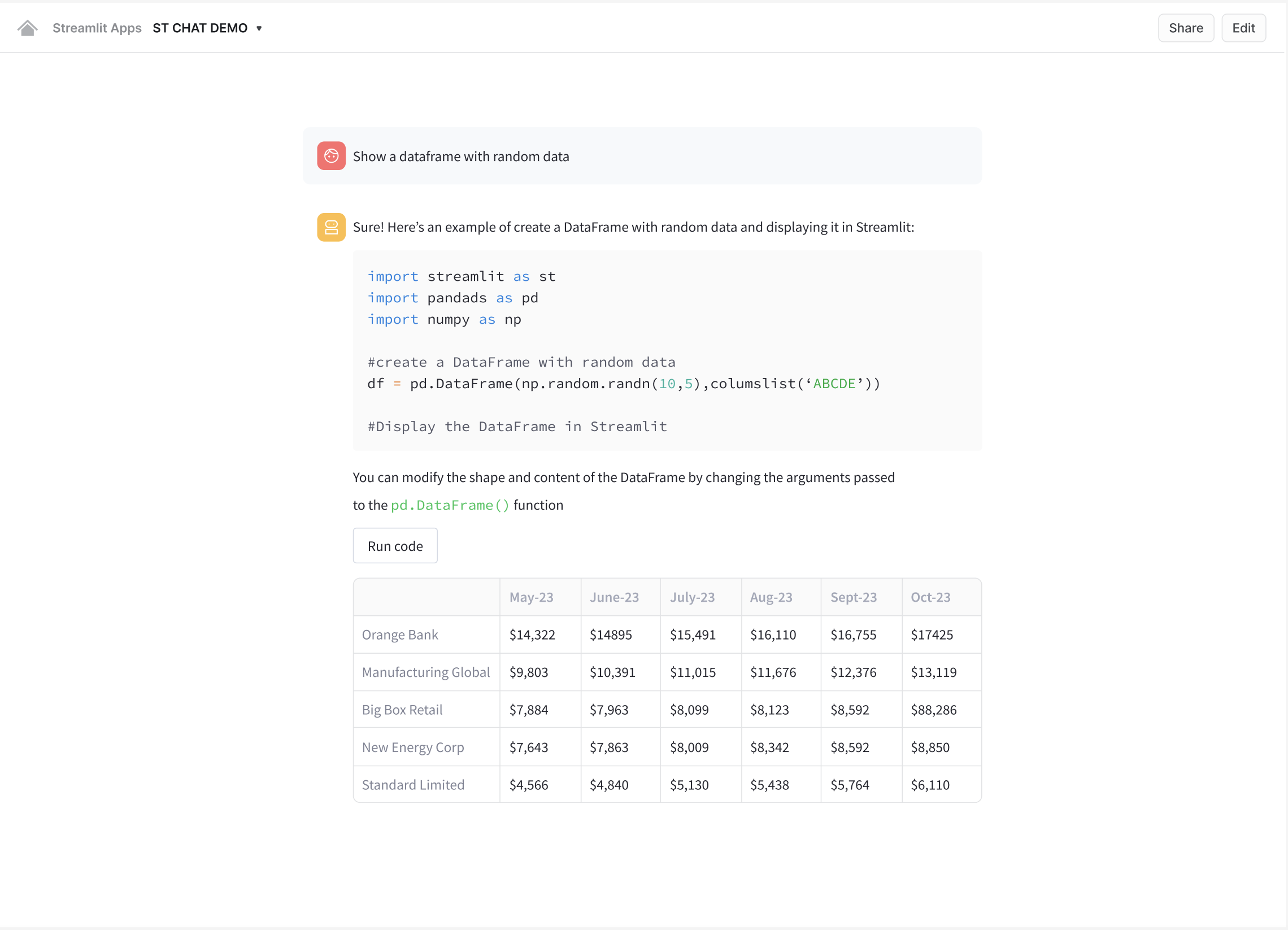

Streamlit in Snowflake (public preview): With Streamlit, teams can further accelerate the creation of LLM apps, developing interfaces in just a few lines of Python code with no front-end experience required. These apps can then be securely deployed and shared across an organization through unique URLs that are generated with a single click and respect existing role-based access controls in Snowflake.

Figure 4. st.chat – Streamlit’s chat component (in development) running in Snowflake

Snowpark Container Services: Deploy custom UIs, fine-tune open source LLMs, and more

To further customize LLM applications, developers have virtually no limits on what they can build and deploy in Snowflake using Snowpark Container Services (in public preview soon in select AWS regions). This additional Snowpark runtime option enables developers to effortlessly deploy, manage and scale containerized workloads (jobs, services, service functions) using secure, Snowflake-managed infrastructure with configurable hardware options, such as NVIDIA GPUs.
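Service functions are one of the workload types listed above. As a hedged sketch, assuming the batched JSON format Snowflake documents for external functions (a `data` array of `[row_index, arg, ...]` rows in the request and `[row_index, result]` rows in the response), a container could expose a handler like the one below. The uppercasing in `process_row` is a hypothetical placeholder for real per-row work such as model inference, and the handler names and port are illustrative only.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Any, Dict, List


def process_row(text: str) -> str:
    """Hypothetical placeholder for real per-row work, e.g. running a fine-tuned model."""
    return text.upper()


def handle_batch(payload: Dict[str, Any]) -> Dict[str, List[list]]:
    """Map {"data": [[row_index, arg], ...]} to {"data": [[row_index, result], ...]}."""
    results = []
    for row in payload["data"]:
        row_index, text = row[0], row[1]
        # Echo the row index back so each result can be matched to its input row.
        results.append([row_index, process_row(text)])
    return {"data": results}


class ServiceFunctionHandler(BaseHTTPRequestHandler):
    """Thin HTTP layer: parse the JSON batch, delegate, write the JSON response."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length))
        body = json.dumps(handle_batch(payload)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8080) -> None:
    """Run the handler inside the container; blocks until the process stops."""
    HTTPServer(("0.0.0.0", port), ServiceFunctionHandler).serve_forever()
```

For example, `handle_batch({"data": [[0, "hello"], [1, "world"]]})` returns `{"data": [[0, "HELLO"], [1, "WORLD"]]}`. Keeping the batch logic in a plain function, separate from the HTTP layer, makes it testable without starting the server; the container entrypoint would call `serve()`.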

Here are some of the most common ways developers can customize LLM apps without moving data out of Snowflake’s governed and secure boundary:

- Deploy and fine-tune open source LLMs and vector databases: Pairing GPU-powered infrastructure with Snowpark Model Registry makes it easy to deploy, fine-tune and manage any open source LLM, or to run an open source vector database such as Weaviate, with Snowflake’s simplicity and within the secure boundary of your account.

- Use commercial apps, LLMs and vector databases: Through the private preview integration with Snowflake Native Apps, Snowpark Container Services can run sophisticated apps entirely within a customer’s Snowflake account. These include commercial LLMs from AI21 Labs or Reka.ai, leading notebooks like Hex, advanced LLMOps tooling from Weights & Biases, vector databases like Pinecone, and much more.

- Develop custom user interfaces (UIs) for LLM apps: Snowpark Container Services gives enterprise developers maximum flexibility when building LLM applications. To create a custom front end with a framework such as ReactJS, they only need to deploy a container image with their code to make it available in Snowflake.

Resources to get started

Snowflake is making it easy for all users to quickly and securely get value from their enterprise data using LLMs and AI. Whether you want to put AI to use in seconds or need the flexibility to build custom LLM apps in minutes, Snowflake Cortex, Streamlit and Snowpark Container Services provide the necessary building blocks without moving data out of Snowflake’s secure and governed boundary. For more information to help you get started, check out: